Thursday, 26 June 2008

Meet the Antipreneurs

They profit from the free market, but they criticize it, too. So how do they get away with it?

Wednesday, 25 June 2008

Iteration Length?

Our organisation has always used two-week iterations, and we sometimes wonder whether two weeks is the optimum. Our first thought was that two weeks fits the bill for enhancement projects, where the team already knows how to implement the features, but that three weeks might be more apt for a brand new project that involves a technical learning curve and innovation. This is subject to discussion with the teams. Although Scrum recommends a 30-day iteration, two-week iterations yield results and three-week iterations can yield innovative results. At the end of the day both iteration lengths are within the realm of Scrum. Iteration length is a variable available to the teams and the product owner; how it is varied matters less than whether the goals of the release are met.

Based on previous experience with two-week iterations, I have made some observations that remind us of the strengths of the two-week iteration:

- Two weeks is just about enough time to get some amount of meaningful development done.

- Two-week iterations provide more opportunities to succeed or fail. For example, within a 90-day release plan, five 2-week development iterations and one stabilisation iteration at the end provide checkpoints on the way to the release.

- The 2-week rhythm is a natural calendar cycle that is easy for all participants to remember and lines up well with typical 1 or 2 week vacation plans, simplifying capacity estimates for the team.

- Velocity can be measured and scope can be adjusted more quickly.

- The overhead of planning and closing an iteration is proportionate to the amount of work that can be accomplished in a two-week sprint.

- A two week iteration allows the team to break down work into small chunks where the define/build/test cycle is concurrent. With longer iterations, there is a tendency for teams to build a more waterfall-like process.

- The margin of error between planned and available capacity is smaller in two-week iterations.

The above is based on what I have observed and may be different in your organisation.
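The release-plan arithmetic from the observations above can be sketched in a few lines. This is only an illustration: the function name, the start date and the 14-day iteration length in calendar days are assumptions made for the example, not something prescribed by Scrum.

```python
from datetime import date, timedelta


def iteration_checkpoints(start, iteration_days=14, dev_iterations=5,
                          stabilisation_iterations=1):
    """Return a (label, end_date) pair for every iteration in the release."""
    checkpoints = []
    end = start
    total = dev_iterations + stabilisation_iterations
    for number in range(1, total + 1):
        end = end + timedelta(days=iteration_days)
        # Iterations after the development ones are stabilisation iterations.
        label = f"iteration {number}" if number <= dev_iterations else "stabilisation"
        checkpoints.append((label, end))
    return checkpoints


release_start = date(2008, 7, 1)  # hypothetical start date
plan = iteration_checkpoints(release_start)
days_used = (plan[-1][1] - release_start).days

for label, end in plan:
    print(label, "ends on", end.isoformat())
print("calendar days used:", days_used)
```

Six two-week iterations use 84 calendar days, which leaves a few days of slack inside a 90-day release and gives a checkpoint every two weeks.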

Tuesday, 24 June 2008

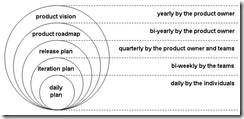

Five Levels of Planning in Scrum

In agile methods, a team gets work through iteration planning. Because iterations are short, the act of planning becomes more important than the plan itself. The disadvantage of iteration planning, when applied to projects that run for more than a few iterations or involve multiple teams, is that the view of the long-term implications of iteration activities can be lost. In other words, the view of ‘the project as a whole’ is lost.

Planning activities for large-scale development efforts should rely on five levels:

• Product Vision

• Product Roadmap

• Release Plan

• Sprint Plan

• Daily Commitment

Each of the five levels of planning helps us address the fundamental planning principles of priorities, estimates and commitments.

Friday, 20 June 2008

Principles of SOA

Although there is no official standard for SOA, the community seems to agree that there are four guiding principles to achieve SOA.

- Service boundaries are explicit.

Services expose business functionality by using well defined contracts, and these contracts fully describe a set of concrete operations and messages. Implementation details of the service are unknown, and the consumer of the service is agnostic to this, so the technology platform of the service is irrelevant to the client. What is relevant though is where the service is present so that the client can reach it to consume it.

Remoting, WCF and Web Services all support this principle, but what distinguishes WCF is its ability to address some of the limitations of the other technologies. For example, for Remoting to be used, the underlying CLR types have to be shared between the client and the service; this is not so in WCF. Web Services with WSE allow a service to address this principle as well.

- Services are autonomous.

As previously mentioned, services expose business functionality. To achieve this they encapsulate it: a service should encapsulate all tiers of the functionality, from database access through the business tier to the contract itself. At the end of the day the service should be replaceable and movable without affecting the consumer. As a rule of thumb, any external dependencies should be eliminated during the design process. Any component change in the service should change the version of the service as a logical unit. This is otherwise called atomicity.

- Clients and Services share contracts, not code.

Given that service boundaries are explicit, the contract enforces the boundary between the client and the service. This leads us to conclude that a service, once published, cannot be changed, and that all future versions should be backward compatible. There is an argument about whether contracts may be tied to a particular technology or platform. I am unable to comment on this, but it seems only fair to say that as long as the service can be consumed by a client and the client can remain agnostic to the technology used to implement the service, this principle is achieved.

- Compatibility is based on policy.

Contracts define the functionality offered by a service, while a policy defines the constraints imposed by the service on the consumer for attributes like security, communication protocol and reliability requirements. Prior to WCF, Web Services with WSE 3.0 helped developers achieve this. I have had the opportunity to do this on .NET 2.0 using WSE, and I can say that it is pretty crucial to how your service behaves with the consumer :). At the end of the day the policy is also exposed to the client in the WSDL, so that the client knows the constraints imposed by the service.

As architects and developers we may try to meet the principles listed above in our applications, but because of the influence of technology on implementation and deployment we may not be able to abide by them strictly, and that is probably all right. For example, if we had three services that encapsulate functionality for order processing, accounts and sales, we can't really give each service its own database to work with individually, because a reporting service may need access to all three. In that case we may consolidate everything into a single database, use schemas to isolate functionality at the database level, and then build a database access layer that serves the functionality required by each service. Relational data seems to be quite limiting in this respect. Would the Entity Framework allow us to address this? Maybe, maybe not; it is yet to be seen.

Thursday, 19 June 2008

WCF Fundamentals

I have been reading up on some WCF concepts and thought it would be useful to jot down some of those outlined by Michele Leroux Bustamante in the book "Learning WCF" for reference purposes. Although it is pure theory, it is useful for getting an understanding of WCF. If you have used WSE before, these may seem familiar.

Messaging, Serialization and RPC

Enterprise applications communicate with each other using remote calls across process and machine boundaries. The format of the message determines an application's ability to communicate with other applications. RPC and XML are two common ways of achieving this. RPC calls are used to communicate with objects or components across process and machine boundaries. An RPC call is marshaled by converting it into a message, which is sent over a transport protocol such as TCP. At the destination the message is unmarshaled into a stack frame that invokes the object. Generally a proxy is used at the client and a stub at the destination to marshal and unmarshal calls at either endpoint. This process is serialization and deserialization. Now the proxy and the stub can use only. Remoting in .NET works on this principle of messaging. RPC comes in many flavours, but the flavours are not compatible with one another, which means RPC is not interoperable. To apply the same idea in an interoperable way we use Web Services or WCF. WCF wins over both RPC and Web Services because of its ability to operate with both RPC-style messaging and Web Service messaging. In both cases the service type is used, and the lifetime of the service type can be controlled using configuration settings defined for the service model.
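The proxy and stub round trip described above can be illustrated with a small, language-neutral sketch. It is written in Python with JSON as the message format purely for illustration. None of the names below (Calculator, CalculatorProxy, marshal, unmarshal_and_invoke) come from WCF or Remoting, and the "transport" is an in-process function call standing in for TCP.

```python
import json


class Calculator:
    """The remote object that the stub invokes at the destination."""

    def add(self, a, b):
        return a + b


def marshal(method, args):
    """Proxy side: convert a call into a message (bytes) for the transport."""
    return json.dumps({"method": method, "args": args}).encode("utf-8")


def unmarshal_and_invoke(target, message):
    """Stub side: convert the message back into a call on the target object."""
    call = json.loads(message.decode("utf-8"))
    return getattr(target, call["method"])(*call["args"])


class CalculatorProxy:
    """Client-side stand-in; `transport` is any callable that carries bytes."""

    def __init__(self, transport):
        self._transport = transport

    def add(self, a, b):
        # The caller sees an ordinary method; the marshaling is hidden here.
        return self._transport(marshal("add", [a, b]))


# In-process "transport" for demonstration; a real one would cross a boundary.
service = Calculator()
proxy = CalculatorProxy(lambda message: unmarshal_and_invoke(service, message))
result = proxy.add(2, 3)
print(result)
```

For brevity the sketch only marshals the request; a real RPC stack would serialize the return value for the trip back as well.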

Services

In WCF all services use CLR types to encapsulate business functionality, and for a CLR type to qualify as a service it must implement a service contract. Marking a class or interface with ServiceContractAttribute makes that class, or the class implementing the interface, a service type. To mark a method as a service operation (in a contract definition), we use OperationContractAttribute.

Hosting

WCF services can be self-hosted or hosted on IIS like ASP.NET applications. The host is important for the service, and therefore a ServiceHost instance is associated with a service type. We construct a ServiceHost and provide it with a service type so that incoming messages can be processed. The ServiceHost manages the lifetime of the communication channels for the service.

Metadata

To understand how it should communicate with a WCF service, the client needs data about the address, binding and contract. This is part of the metadata of the service, and clients rely on it to invoke the service. Metadata can be exposed in two ways: the service host can expose a metadata exchange endpoint so that metadata can be accessed at runtime, or it can be published as WSDL. In either case clients use tools to generate proxies.

Proxies

Clients use proxies to communicate with the WCF service. A proxy is a type that represents the service contract and hides serialization details from the client. As with Web Services, in WCF if you have access to the service contract you can create a proxy using a tool. The proxy only gives information about the service and its operations; the client will still need metadata to generate endpoint configuration, and there are tools available to do this.

Endpoints

When a service host opens a communication channel for a service, it must expose one or more endpoints for the client to invoke. An endpoint is made of three parts: Address (a URI), Binding (protocols supported) and Contract (operations). A service host is generally provided with one or more endpoints before it opens a communication channel.
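As a toy model of the address, binding and contract triple, the sketch below treats an endpoint as a plain record and refuses to open a host that has no endpoints. It is not the WCF API: ToyServiceHost, its methods, and the OrderService and IOrderService names are all hypothetical and exist only to make the relationship concrete.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Endpoint:
    address: str   # where the service can be reached (a URI)
    binding: str   # which protocols are used to talk to it
    contract: str  # which operations are offered


@dataclass
class ToyServiceHost:
    service_type: str
    endpoints: list = field(default_factory=list)
    is_open: bool = False

    def add_endpoint(self, address, binding, contract):
        self.endpoints.append(Endpoint(address, binding, contract))

    def open(self):
        # A host with nothing to expose cannot accept calls.
        if not self.endpoints:
            raise ValueError("a host needs at least one endpoint before opening")
        self.is_open = True


host = ToyServiceHost("OrderService")
host.add_endpoint("net.tcp://localhost:9000", "tcp", "IOrderService")
host.open()
print(host.is_open, host.endpoints[0])
```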

Addresses

Each endpoint for the service is represented by an Address. The Address is in the format scheme://domain:port/path.

- Scheme represents the transport protocol, such as HTTP, TCP, named pipes or MSMQ. The scheme for MSMQ is net.msmq, for TCP it is net.tcp and for named pipes it is net.pipe.

- Port is the communication port to be used for the scheme. It needs to be specified only when it differs from the scheme's default port.

- Domain represents the machine name or the web domain.

- Path is usually provided as part of the address to disambiguate service endpoints, e.g. net.tcp://localhost:9000 or net.msmq://localhost/QueuedServices/ServiceA
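To see the four parts of an address in isolation, the two example addresses above can be taken apart with a generic URI parser. The sketch uses Python's standard urllib.parse rather than anything from WCF, and split_address is a hypothetical helper name.

```python
from urllib.parse import urlsplit


def split_address(address):
    """Break an endpoint address into scheme, domain, port and path."""
    parts = urlsplit(address)
    return {
        "scheme": parts.scheme,
        "domain": parts.hostname,
        "port": parts.port,   # None when the address relies on the default port
        "path": parts.path,   # empty when the address has no path
    }


tcp = split_address("net.tcp://localhost:9000")
msmq = split_address("net.msmq://localhost/QueuedServices/ServiceA")
print(tcp)
print(msmq)
```

The first address carries an explicit port and no path; the second relies on the default port and uses the path to tell endpoints apart.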

Bindings

A binding describes the protocols supported by a particular endpoint, specifically

- Transport protocol: TCP, MSMQ, HTTP or named pipes.

- Message encoding format: XML or binary.

- Other protocols for messaging, security and reliability, plus anything else that is custom.

Several predefined bindings are available as standard bindings in WCF; however, these only cover some common communication scenarios.

Channels

Channels facilitate communication between the client and the WCF service. The ServiceHost creates a channel listener for each end point which generates a communication channel. The proxy on the client creates a channel factory which generates a communication channel for the client. Both these channels should be compatible for communication to be successful between the client and the service.

Behaviors

Behaviors are local to the service or the client and are used to control features such as exposing metadata, authorisation, authentication or transactions.

Wednesday, 18 June 2008

SQL Server 2008 RC0 is out

SQL Server 2008 RC0 seems to have been released quietly; I didn't realise it was out. It is available to download at SQL Server 2008 RC

PS: SQL Server 2008 RC0 will automatically expire after 180 days

Sunday, 15 June 2008

Enterprise Application Integration

I have recently been thinking about integration scenarios for different applications and how to decide on the way two applications should be integrated. In fact, my question is: what factors would I consider when deciding how two applications will integrate? On the same note, as much as there is a benefit in integrating two applications, more often than not there are consequences that depend on how the applications are integrated.

Should the two applications be loosely coupled? This is the first question that comes to my mind, and on most occasions the answer is yes. The more loosely coupled the two applications are, the more opportunities they have to extend their functionality without affecting each other or the integration itself. If they are tightly coupled, the integration breaks when the applications change.

Simplicity: if we as developers minimize the code involved in integrating the applications, the integration becomes easier to maintain and works better.

Integration technology: different integration techniques require varying amounts of specialized software and hardware. These special tools can be expensive, can lead to vendor lock-in, and increase the burden on developers to understand how to use the tools to integrate applications.

Data format: the format in which data is exchanged is important. It should be one that an independent translator, and not only the integrating applications themselves, can work with, so that at some point these applications are also able to talk to other applications should there be a need. A related issue is data format evolution and extensibility: how the format can change over time and how that will affect the applications.

Data timeliness: we need to minimize the length of time between when one application decides to share some data and when other applications have that data. Data should be exchanged in small chunks rather than large sets. Latency in data sharing has to be factored into the integration design; the longer the latency, the more opportunity there is for shared data to become stale, and the more complex the integration becomes.

Data or functionality: integrated applications may also wish to share functionality, such that each application can invoke the functionality in the others. But this may have significant consequences for how well the integration works.

Asynchronicity: this is an aspect developers start to realise only after implementation, when performance tests fail. By default we think and code synchronously. That is a problem for integrated applications in particular, where the remote application may not be running or the network may be unavailable; in those cases the source application may wish to simply make shared data available or log a request.
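One way to picture the asynchronous option is a store-and-forward outbox: the source application records each request locally and delivers it whenever the remote side is reachable. The sketch below is only an illustration of that idea under simple assumptions (in-memory queue, a ConnectionError signalling an unreachable remote); the Outbox and FakeRemote classes are hypothetical and not taken from any integration product.

```python
from collections import deque


class Outbox:
    """Queue requests locally and forward them when the remote is reachable."""

    def __init__(self, send):
        self._send = send        # callable that raises ConnectionError on failure
        self._pending = deque()

    def publish(self, message):
        # Logging the request never blocks on the remote application.
        self._pending.append(message)
        self.flush()

    def flush(self):
        """Try to deliver pending messages in order; stop at the first failure."""
        while self._pending:
            try:
                self._send(self._pending[0])
            except ConnectionError:
                return False
            self._pending.popleft()
        return True

    def __len__(self):
        return len(self._pending)


class FakeRemote:
    """Stand-in for the remote application, which may be down."""

    def __init__(self):
        self.up = False
        self.delivered = []

    def send(self, message):
        if not self.up:
            raise ConnectionError("remote application unavailable")
        self.delivered.append(message)


remote = FakeRemote()
outbox = Outbox(remote.send)
outbox.publish("order-1")
outbox.publish("order-2")
queued_while_down = len(outbox)

remote.up = True   # the remote application comes back
outbox.flush()
print(queued_while_down, remote.delivered)
```

A production version would persist the queue so that logged requests survive a restart of the source application.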

Friday, 13 June 2008

ASP.Net and COM Interop

At some point most of us have had to use a COM component in a .NET application as part of migrating legacy code to new technologies. What puts me off is doing it without knowing why we are doing it, or at the least how the legacy component works. Visual Studio makes it very easy to use COM components by doing all the work for you under the covers. In this article I would like to see how COM is used and get into some of the aspects of dealing with COM in the .NET world. A COM component can be consumed in .NET by either early binding or late binding.

Early binding

This is where type information about the COM component is available to the consumer at design time. The most common thing we do is reference the component in VS.NET, and the studio runs tlbimp.exe to generate the interop assembly for consumption by .NET code; this is because .NET needs metadata about the component beforehand. Another reason this is the preferred way of consuming COM objects is that early binding is much faster than late binding. In addition, developers are able to use the COM object as if it were another .NET object, creating an instance with the new keyword.

Late Binding

Information about the COM component is not known until the code is executed at runtime. A classic example of this is HttpServerUtility.CreateObject, used in ASP.NET pages; you need to pass the program ID of the COM component to this method. Now it is important to consider how COM components are instantiated. In Windows XP and 2000, how the COM component is instantiated depends on how its threading model is marked in the registry: Free, Apartment, Neutral or Both.

Components marked Free

When we call a COM component marked Free in ASP.NET, the instance is created on the same thread-pool thread on which the ASP.NET page started running. The ASP.NET thread pool is initialised as a multithreaded apartment (MTA), and since the COM component is marked Free, no thread switch is necessary and the performance penalty is minimal.

Components marked Apartment

Traditionally, business COM components that are called from ASP have been marked Apartment. The single-threaded apartment (STA) threading model is not compatible with the default threading model for the ASP.NET thread pool, which is MTA. As a result, calling a native COM component marked Apartment from an ASP.NET page results in a thread switch and COM cross-apartment marshalling.

Under stress, this presents a severe bottleneck. To work around this issue, a new directive called ASPCompat was introduced to the System.Web.UI.Page object.

How ASPCompat Works

The ASPCompat attribute minimizes thread switching due to incompatible COM threading models. More specifically, if a COM component is marked Apartment, the ASPCompat = "true" directive on an ASP.NET page runs the component marked Apartment on one of the COM+ STA worker threads. Assume that you are requesting a page called UnexpectedCompat.aspx that contains the directive ASPCompat ="true". When the page is compiled, the page compiler checks to see if the page requires ASPCompat mode. Because this value is present, the page compiler modifies the generated page class to implement the IHttpAsyncHandler interface, adds methods to implement this interface, and modifies the page class constructor to reflect that ASPCompatMode will be used.

The two methods that are required to implement the IHttpAsyncHandler interface are BeginProcessRequest and EndProcessRequest. The implementation of these methods contains calls to this.ASPCompatBeginProcessRequest and this.ASPCompatEndProcessRequest, respectively.

You can view the code that the page compiler creates by setting Debug="true" in the <compilation> section of the web.config or machine.config files.

The Page.ASPCompatBeginProcessRequest() method determines if the page is already running on a COM+ STA worker thread. If it is, the call can continue to execute synchronously. A more common scenario is a page running on a .NET MTA thread-pool thread. ASPCompatBeginProcessRequest() makes an asynchronous call to the native function ASPCompatProcessRequest() within Aspnet_isapi.dll.

The following describes what happens when invoking COM+ in the latter scenario:

1. The native ASPCompatProcessRequest() function constructs an ASPCompatAsyncCall class that contains the callback to the ASP.NET page and a context object created by ASP.NET. The native ASPCompatProcessRequest() function then calls a method that creates a COM+ activity and posts the activity to COM+.

2. COM+ receives the request and binds the activity to an STA worker thread.

3. Once the thread is bound to an activity, it calls the ASPCompatAsyncCall::OnCall() method, which initializes the intrinsics so they can be called from the ASP.NET page (this is similar to classic ASP code). This function calls back into managed code so that the ProcessRequest() method of the page can continue executing.

4. The page callback is invoked and the ProcessRequest() function on that page continues to run on that STA thread. This reduces the number of thread switches required to run native COM components marked Apartment.

5. Once the ASP.NET page finishes executing, it calls Page.ASPCompatEndProcessRequest() to complete the request.